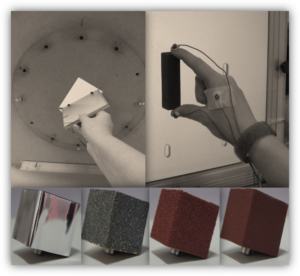

The GRaSPER Lab at the University of Aberdeen

Grasping, Reaching and Spatial Perception Experimental Research

The aim of our research is to understand how we use perceptual processes for action planning and control, and how our actions are moderated by visual and cognitive factors. We are also interested in how the physical and affective properties of materials influence behavioural intentions. Our experiments are designed to gain insight into the complex interplay between the perceptual, cognitive, and motor processing systems.

We primarily use experimental behavioural methods (psychophysics), and occasionally conduct neuropsychological studies examining the visuomotor behaviour of patients with specific perceptual and spatial impairments (such as hemianopia and visual neglect).